Mimi Onuoha, Pulse, 2015. Copyrights: Mimi Onuoha

‘Go with the bias’ - Examining prejudiced technologies

We tend to talk about digital technologies as if they are completely neutral and objective. But the opposite holds true. Big Bias, the first edition of the lecture series Coded Matter(s) of FIBER Festival, takes a closer look at multiple biases present in technology.

After a few years of absence FIBER Festival returns to De Brakke Grond with a new season of Coded Matter(s). This lecture series aims to reflect on the impact of digital technologies on society through the lens of the arts. This year’s theme is Worldbuilding, a theme that will be explored in three editions. The first of these editions focuses on the topic of big bias in digital technologies.

Moderator Tijmen Schep shows a Wiki page listing dozens of cognitive biases. Humans have always had biases and lots of them! But what about digital technologies? Can computer algorithms have biases too? And if so, how do these biases manifest themselves and how do they exert their effects on society? Through their artistic practices all three presenters reflect upon different forms of bias in big data.

First up is Brooklyn-based artist Mimi Onuoha, who through her various artistic interventions examines the implications of data collection and computational categorization. She is interested in what gets abstracted or left out in the act of data collection, and in bringing these elements back to the foreground. The first work she discusses is the site-specific, one-time performance Pulse (2015), in which she strapped a heartbeat sensor around her waist and projected the collected data on the wall behind her in a room full of people. The work raises questions about what actually gets represented (and what does not) when we visualize data, and she soon discovered how people tend to read into it whatever they want, thus fetishizing the data. Ten minutes into the performance she received a text message from the person she was dating at the time, who suddenly announced that they were breaking up with her. This added a strange tension to the work: she desperately wanted to hide her intimate moment of confusion and heartbreak from others, while at the same time her heartbeat data was being publicly broadcast onto the wall for everyone present to observe.

Mimi Onuoha. Photography: Sebastiaan ter Burg

Mimi Onuoha, Pathways, 2014. Copyrights: Mimi Onuoha

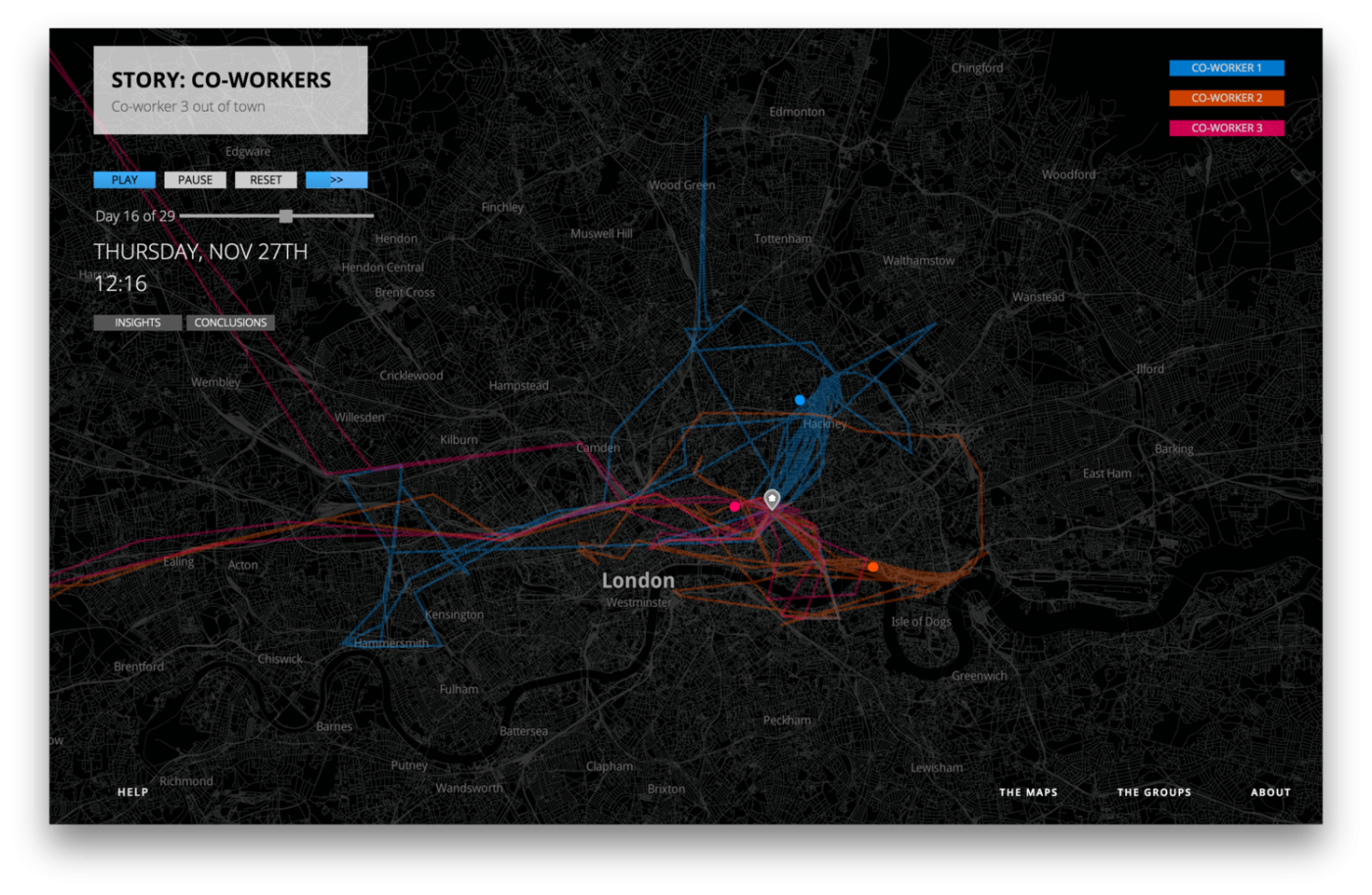

For another project, Pathways (2014), Onuoha collected the geolocation and message metadata of four groups of Londoners over the course of one month, which she then published on the National Geographic website in the form of animated digital maps and graphs. Yet more important than these data visualizations are the relationships at play here. As Onuoha notes, in data collection there is always an entity that is out to collect data and an entity that has to give up data, a relationship that oftentimes gets abstracted. That is why she insisted on meeting the people she monitored face-to-face and having them hand over their data physically, an act which consequently brought up issues of trust and possession.

After having enacted these various artistic interventions, Onuoha now reflects on the patterns that underlie these “blind spots in data saturated spaces.” She points out four reasons why missing data sets exist. First off, those having the resources to collect data often lack the incentive, and vice versa. For example, for a long time data on the number of innocent civilians killed by the police were missing, because the ones with the resources to collect these data, i.e. the police, lacked the incentive to do so, while the ones who did have an incentive to collect these data, i.e. human rights initiatives, lacked the appropriate resources. Secondly, sometimes the act of collecting costs more than it yields. A clear example of this is data on sexual harassment. Going public with your story of sexual abuse is often more of a hassle than simply keeping quiet. Thirdly, some data resist metrification. Here, Onuoha refers to the statistics on cash and how the US government has no clue how many dollars circulate outside the US. And finally, at times there are advantages to data not existing. Someone who is situationally disadvantaged, for example, might greatly benefit from living under the radar.

Femke Snelting. Photography: Sebastiaan ter Burg

Femke Snelting, Tomography, 2018. Copyrights: Femke Snelting

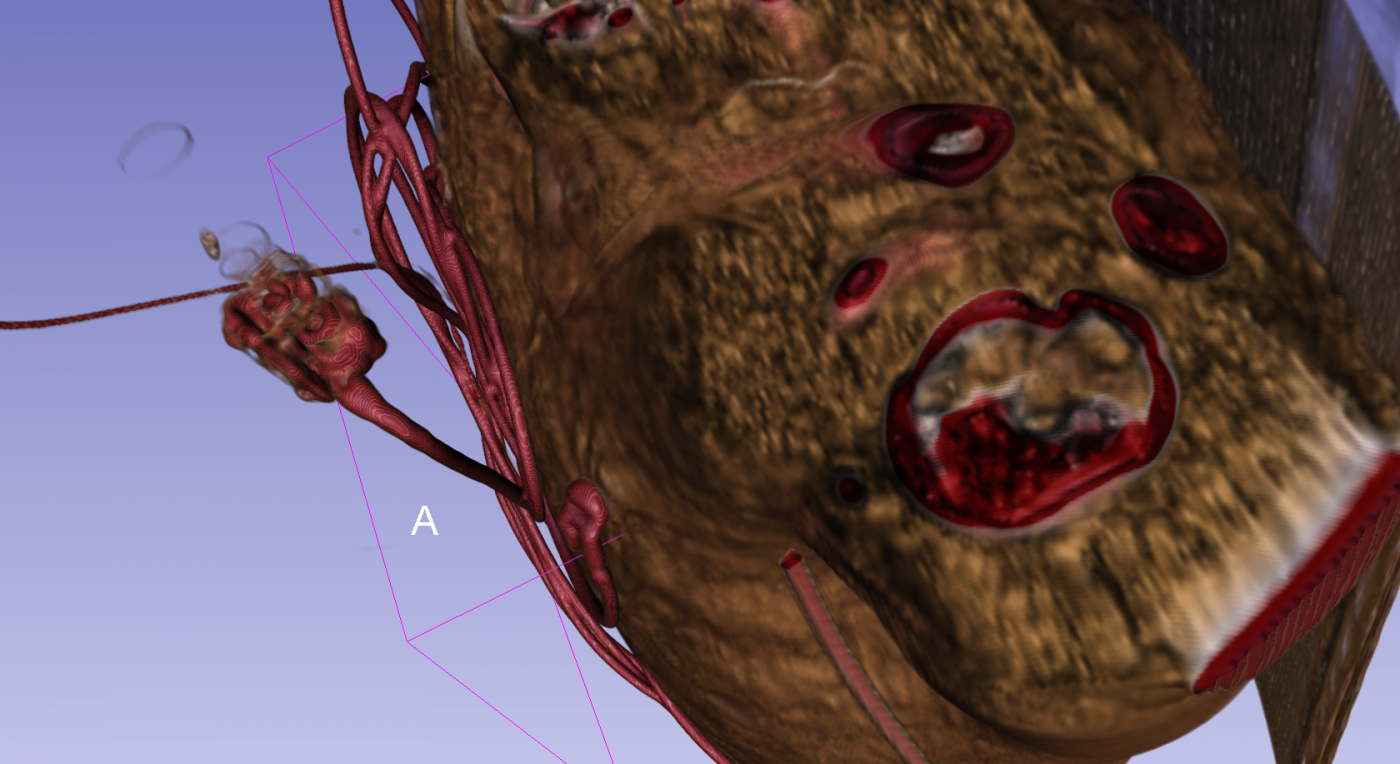

The second speaker of the evening is Femke Snelting, a graphic designer from Brussels who became fascinated with computer software. She presents part of a joint initiative with Jara Rocha called the Possible Bodies project, in which they explore “the conditions of possibility for the presences of bodies to appear in 3D spaces.” While non-invasive biomedical imaging techniques like CT, PET and MRI (collectively referred to as tomography) are the state of the art, they want to argue that issues relating to age-old dissection practices are prone to sneak back in through the back door.

For one, there’s the act of slicing (tomos meaning ‘slice’ in ancient Greek). In tomography 3D renderings of the body are built up out of stacks of 2D data graphs. The problem is as follows. In a technique like endoscopy, in which a small camera is inserted into the body, the body interacts with the camera and co-produces whatever image is created; in tomography, by contrast, the body is treated as a still image. The 3D data graphs, and subsequent data visualizations thereof, are completely cut off from the materiality of the body. The body is stripped of its agency, and this opens the door for normative preconceptions about the body to secretly reinscribe themselves.

Secondly, 3D visualizations of the body are required to fit the Cartesian space of 3D modelling, a space characterized by demarcations and classifications and gazed upon from a singular perspective. In this space hard cuts are made between the body and the environment and between the body’s constituent organs. Ambiguity is flushed out and once more the idea of a non-agential body reinstates itself, a body that can be invaded without consequence, segmented into clearly demarcated territories, and that is healthier because of it. This act of demarcation is evinced in the video loop projected on the screen behind Snelting. On it an uncleaned 3D rendering of a human torso can be observed tilting slowly amidst a monochrome digital abyss. Parts of the scanning apparatus and the life support are still clearly visible, elements that ordinarily get removed from the image.

Snelting is afraid that the rapid advancements of biomedical imaging technologies will shift the focus from considerations of the probable to the possible. As she sees it, this is where this type of worldbuilding gets problematic. In a world focused solely on optimization the space for thinking differently becomes marginalized. She argues that the way to go from here is not to be overly skeptical about scientific and technological innovations and the biases that inhere in them, nor, for that matter, to be overly optimistic. Instead, rephrasing feminist studies scholar Donna Haraway, she proposes to “go with the bias.” Invoking the term bias’s semantic relation to textile, she proposes to not cut with the grain, nor against it, but to cut diagonally, a mode of reworking the fabric that retains the material’s pliable and stretchable properties.

Zach Blas holding up a chunk of polycrystalline silicon. Photography: Sebastiaan ter Burg.

Zach Blas. Photography: Sebastiaan ter Burg

The evening is concluded with Zach Blas’s lecture-performance Metric Mysticism. For this presentation Blas delves into the lore of Silicon Valley and the ways in which data analytics companies appropriate mysticism and magic to transform big data into a contemporary materialization of the absolute. Blas centers his investigation on tech start-up Palantir Technologies, co-founded by Peter Thiel and Alex Karp, which specializes in applying big data analysis to anti-terrorism efforts. The company’s name is a direct reference to Tolkien’s Lord of the Rings series, in which a palantir is a crystal ball used by the evil wizard Saruman to oversee the whole of Middle-earth. The act of scrying (or crystal ball gazing) has a longer tradition, however, tracing all the way back to the Middle Ages, and is often imagined as a means of looking into the future.

Yet in Silicon Valley the aim is no longer simply to gaze at the future, but to preempt it. Big data analysis is used as a policing tool to anticipate crimes before they have even happened. According to Blas, tech companies like Palantir Technologies go beyond mere data analysis and are in fact engaged in what he refers to as metric mysticism. In metric mysticism “any mathematical or scientific objectivism is recast as a kind of unwavering eternal truth and turns it into something metaphysical.” Data becomes the god of our times that can only be accessed through these technology platforms, turning tech entrepreneurs like Peter Thiel into metric mystics that exert control over our future.

Ethereal music starts playing in the auditorium of De Brakke Grond and Blas pulls out a little chunk of polycrystalline silicon. He proposes that, as an alternative to the assumed transparency and transcendentality of crystal ball gazing, we should instead gaze upon this resolutely material, fragile, finite and opaque piece of metal that is at the heart of so much of our computational technologies today, a gaze that does not permit us a glimpse of the future, but that promotes an awareness of the ultimately material conditions that constitute our historical present.

Coded Matter(s): Big Bias, FIBER Festival, De Brakke Grond, Amsterdam, 17.05.2018; next edition Terra Fiction, De Brakke Grond, Amsterdam, 27.09.2018; Zach Blas, The Objectivist Drug Party, MU, Eindhoven, through 08.07.2018

Nim Goede

PhD candidate at Amsterdam School for Cultural Analysis studying Art & Neuroscience.